Chapter 1 - Historical development and related work

I have had my results for a long time: but I do not yet know how I am to arrive at them.

Karl Friedrich Gauss

In 2003 a study was conducted [lyman03howmuch] that tried to estimate how much new information is created each year. The conclusion was that the world produces between 1 and 2 exabytes of unique information each year, roughly about 250 megabytes for each human being. This amount of information affects most of us in our daily life as we constantly find ourselves overwhelmed by it in many different forms. Think of all TV programs there are to choose among. Which news stories are worth paying attention to? Look at all the new email that keeps on arriving to the inbox? Which restaurant to eat lunch at? And what to eat at the restaurant? Which is the right gift? Which is the right movie to rent? What book, cd or movie to buy from Amazon? A commonly used term for these problems is information overload , something that will happen to all of us sooner or later.

Since ninety-two percent of all the information is stored on magnetic media, primarily hard disks, the research and development of computerized tools for storing, organizing and searching information is highly needed, and indeed, in the field of information retrieval and information filtering , this has been an issue of interest for more than half a century. Applications have been developed in both fields that are suited for different data domains. In the field of information retrieval applications such as search engines for the Web and text-retrieval applications that searches large text-document collections have been developed. In the field of information filtering we have spam filters that filters incoming mail and makes sure no unwanted mail arrives in our mailbox. And from both fields we have recommender systems that retrieve and filter information by figuring out what our needs are, and uses this knowledge to recommend the information that we will probably have most use of.

Information Retrieval

One definition of information retrieval can be found in the book "Information retrieval" by Rijsbergen [vanrijsbergen79information]

"An information retrieval system does not inform (i.e. change the knowledge of) the user on the subject of his inquiry. It merely informs on the existence (or non-existence) and whereabouts of documents relating to his request."

In its basic form, information retrieval is concerned with finding text-documents that match some search criteria. In a typical information retrieval system the user types in a few keywords, a search query, that best describe the wanted information, the information retrieval system then finds this information by matching the keywords against the contents of information stored as textual data. Examples of information retrieval systems are web search engines like Google [brin98anatomy] that matches search queries against the textual contents of web documents, and returns the documents that best match the keywords, usually sorted according to how much they overlap with the search query and by their link structure. Text and document retrieval applications are by far the most popular use of information retrieval systems. One drawback with the query style for retrieving information is that the users have to type in a new question every time they want to know if new information is available for a subject of interest.

Pioneering work on this matter was done in the late 50's by H.P. Luhn, who was the first to automate the process of information retrieval. The purpose of his work was to make it easier for readers of technical literature to quickly and accurately identify the topic of a paper. This was done by first making the documents machine-readable, using punch-cards, and then using statistical software to calculate word frequencies in order to find significant words and sentences that could be used for abstract creation [luhn58automatic] . In his paper "A Business Intelligence System" [luhn58business] , he also describes a system that manages user profiles that consists of the users interests coded as keywords. The system retrieves and stores information that matches the user profile by comparing the user profile against a database of documents. This approach is similar to what later on will be referred to as information filtering .

Beginning in the 1960's a lot of research was done in the field of information retrieval. One of the major researchers in this field was Gerald Salton, who was the lead researcher of a project called the SMART information retrieval system [salton71smart] . Important concepts like the vector space model , term-weighting and relevance feedback was introduced and developed as a part of this research. The system itself was an experimental text-retrieval application that allowed researchers to experiment and evaluate new approaches in information retrieval. The applications that were developed during these years were proven to perform well on relatively small text collections (on the order of thousands of documents) but whether or not they would scale to larger text collections wasn't clear until after the Text REtrieval Conference (TREC)(*) in 1992. The purpose of TREC was to support research within the information retrieval community by providing the infrastructure necessary for large-scale evaluation of text retrieval methodologies. TREC resulted in large text collections being made available for researchers. TREC is co-sponsored by the National Institute of Standards and Technology and U.S. Department of Defence and is now an yearly event. With the distribution of a gigabyte of text with hundreds of queries, the performance of the retrieval systems could now be judged by their actual performance. New and improved applications could, thanks to this, be developed that were suitable for large text collections. For example, in the early days of the Web, searching the Web was done by using algorithms that had been developed for searching large text collections [singhal01modern] .

The field of information retrieval is still even after nearly fifty years of research a very active and important research field due to the increasing amount of information being generated - not in the least due to the introduction of the World Wide Web in the beginning of the 1990's. The research area has gone from its primary goal of indexing text and searching for useful documents in text collections to include research on modelling, document classification and categorization, systems architecture, user interfaces, data visualization, filtering, language analysis, etc. Some modern applications of information retrieval are search-engines, bibliographic systems and digital libraries. See for example the book "Modern Information Retrieval" [baeza-yates99modern] for a overview of the research in information retrieval with a focus on the algorithms and techniques used in information retrieval systems.

Information Filtering

Today's recommender systems have their origin in the field of information filtering, a term coined in 1982 by Peter J. Denning in his ACM president's letter titled "Electronic junk" [denning82electronic] . In the paper Denning writes that:

"The visibility of personal computers, individual workstations, and local area networks has focused most of the attention on generating information -- the process of producing documents and disseminating them. It is now time to focus more attention on receiving information-- the process of controlling and filtering information that reaches the persons who must use it."

Dennings describes one approach for how to deal with the problem of filtering information being received, called content filters. He describes content filters as a process by which for example the contents of emails are scanned in order to decide what to do with them; discard them or forward them. He ends the letter with a open request that something must be done about the growing problem of how to handle the quantity of information that we produce.

The 1970's was a big decade when it came to databases, which in combination with the falling prices of storage space led to an increasing amount of digital information being available in the 80's. There was also an increasing availability of computers as well as an increasing usage of computer networks to disseminate information. It was probably the beginning of these trends that Dennings had observed and which led to his request for more effective filtering techniques. As a result, there was an increased activity in the research of filtering systems during the 80's and several papers were published on the subject, the paper by Malone et al. [malone87communication] published in 1987 being one of the more influential ones. Their paper discusses three paradigms for information filtering called cognitive , economic and social . Their definition of cognitive filtering is equivalent to the content filter defined by Dennings and is now commonly referred to as content based filtering. Economic filtering is based on the fact that a user often has to make a cost-versus-value decision when it comes to processing information, e.g. "is it worth my time to read this document or email". Social filtering is defined as a process where users annotate documents they have read and where the annotations are then used to represent the documents. Users decide, based on the annotations, if the documents are worth reading. They thought that, if practical, this approach could be used on items regardless of whether or not the contents of the item can be represented in a way suitable for content filtering.

In November 1991, Bellcore hosted a Workshop on High Performance Information Filtering. The workshop brought together researchers with interests in the creation of large-scaled personalized information delivery systems and covered various aspects of this area and its relation to information retrieval. The workshop resulted in a special issue on information filtering in Communications of the ACM [cacm92communications] . One of the articles in the special issue was written by Belkin and Croft [belkin92information] , in it they discuss the difference between information filtering and information retrieval. They come to the conclusion that both techniques are very similar on an abstract level and has the same goal, i.e. to provide relevant information to the user, but define some differences that are significant for information systems; filter systems are suitable for long-termed user-profiles on dynamical data whereas retrieval systems are suitable for short-termed user profiles on static data. The traditional way of retrieving information is to ask a query (short-termed) that is matched against available information. A system can however also build up a user profile (long-termed) which is used in connection with every query, this means that personalization can be done, each answer is adjusted to the users preferences as well as the actual query. The other articles in the special issue give examples for applications of information filtering and extensions to information retrieval.

Collaborative Filtering

Remember to always be yourself. Unless you suck.

Joss Whedon

One article [goldberg92tapestry] in the special issue on information filtering in Communications of the ACM [cacm92communications] took a different approach to information filtering. They implemented their own version of social filtering in a system called Tapestry. Tapestry allowed a small community of users to add annotations such as ratings and text comments to documents they had read. Users could then create queries like "receive all documents that Giles likes". The system relied on the users all knowing each other, so that users could judge from a personal basis or by reputation if the reviewer of a document was trustworthy or not. Two major drawbacks with the system was that it did not scale well to larger user groups (as everybody had to know each other), and the filtering process had to be done manually by the users (the formulation of specific queries). They chose to call their approach collaborative filtering , which also became the name for a new direction in the research area of information filtering.

The new research direction resulted in further research into collaborative filtering, and the development of new collaborative filtering systems, where GroupLens [resnick94grouplens] for Usenet News, Ringo [shardanand95social] for music and video@bellacore [hill95recommending] for movies are among the more notable ones. The main contributions of these new systems were to automate the filtering process of Tapestry and make it more scaleable. By letting users be represented by their ratings on items, the systems could automatically find other users that had a similar type of rating behavior as the active user. Users with similar rating behavior could then become recommenders for the active user on items they had rated and which the active user hadn't rated. This approach was based on the heuristic that users that have agreed in the past probably will agree in the future . As users were no longer required to all know each other the former scalability issue of Tapestry was solved. In other words, the users that were found similar to you would take on the same role as the trusted well-known reviewer in the Tapestry system, with the difference that now you didn't know who the reviewers were, the trust was instead put on the system to find them.

Motivated by the research done into collaborative filtering since the 1991 workshop where Tapestry was presented, a new workshop was held in 1996 by Berkley, this time the topic was collaborative filtering. The workshop resulted in a special March issue of 1997's Communications of the ACM [cacm97communications] , covering the new research into collaborative filtering. It was this year that collaborative filtering got its real major breakthrough in the research community at large and the term recommender system got coined.

While the automated collaborative filtering systems had solved the scalability issue of Tapestry, now allowing a theoretically unlimited number of users that didn't need to know each other, a new scalability issue had manifested. The increasing number of users required an increasing amount of memory and computation time. Breese suggested in [breese98empirical] a model based approach to CF, and therefore decided to divide CF techniques into two classes, memory based collaborative filtering and model based collaborative filtering .

Memory based collaborative filtering techniques were to correspond to the earlier automated CF techniques that required that the entire database of user's ratings on items was kept in memory during the recommendation process. Since the entire rating database is kept in memory, new ratings can immediately be taken into account as they become available. Recommendations for the active user can be produced using variations of nearest neighbor algorithms, memory based CF is for this reason sometimes referred to as neighborhood based CF . These algorithms utilize the whole rating database, to find neighbors to the active user, by calculating similarity between the active user and all the other users in the rating database based on how they have rated items. Users that are found to be similar to the active user are then used as recommenders in the recommendation process. The major drawback with memory based CF techniques is as already noted that they tend to scale very poorly, larger rating databases require more memory and more calculations which slow down the recommendation process.

Model based collaborative filtering techniques, Breese suggested, will on the other hand only use the rating database to construct a model (hence the name) that can be used to make recommendations. The construction of such a model is primarily based on the rating database, however once the model is created the rating database is no longer needed. It is thus not necessary to keep the huge rating database in memory, which typically should improve the performance and scalability issues. But since it is just a model, it may (depending on the model) be necessary to reconstruct it as more ratings become available in order to accurately model the relations of users and items in the rating database. The need to reconstruct the model can be a significant drawback with this approach.

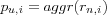

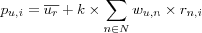

Breese proposes in [breese98empirical] a probabilistic perspective to model based CF, where calculating the predicted value pu, i for an item i for the active user u can be viewed as calculating the active user's expected rating ru, i on the active item, given what we know about the active user. The information available about the active user is the set ur of all ratings given by active user. Assuming ratings to be in the interval ![[R_{min},R_{max}]](image/math0.png) the following formula could then be used to calculate the predicted value:

the following formula could then be used to calculate the predicted value:

![p_{u,i} =

E \left( r_{u,i} \right) =

\sum_{v=R_{min}}^{R_{max}} \left[ v \times P \left( r_{u,i} = v | u_r \right) \right]](image/equation0.png)

Where P is the conditional probability that the active user will give the active item a particular rating. To estimate the probability P , Breese proposes two alternative probabilistic models: Bayesian Networks , where each item is represented as a node in a network and the states of the nodes correspond to the possible ratings for each item; and Bayesian clustering where the idea is that there are certain groups or types of users capturing a common set of preferences and tastes, as such user clusters are formed, and the probability that the active user belongs to each of them is estimated, using the estimated class membership probabilities predictions can be calculated.

Several other probabilistic models have been proposed in the literature, some more advanced than others, such as the work of [shani02mdp] where they see the recommendation process as a sequential decision process and proposes the use of Markov decision chains to create a model. However, they don't report any better accuracy than the models proposed by Breese. Many other non-probabilistic model based CF techniques have been suggested, such as the machine learning techniques found in [billsus98learning] , where artificial neural networks are used together with feature extraction techniques like singular value decomposition to build prediction models. They claim a slightly better result than the correlation based techniques proposed earlier by e.g. GroupLens [resnick94grouplens] .

In 1999 Herlocker and others [herlocker99framework] summarizes what can be referred to as the state of the art of CF. The automated CF problem space is formulated as predicting how well a user will like an item that the user has not yet rated given the ratings for a community of users . An alternative problem space definition is also given where users and items are represented as a matrix where the cells consist of ratings given by the users on the items, the collaborative filtering recommendation problem can then be formulated as predicting the missing ratings in the user item rating matrix [ucf rating matrix] . The most prevalent algorithms used by collaborative filtering techniques are considered to be neighborhood based (which is still true). A framework for performing collaborative filtering using neighborhood based methods is presented and discussed in detail. An important and still widely used extension to neighborhood based collaborative filtering methods called significance weighting is introduced, where the similarity between users is down weighted according to number of co-rated items.

An alternative approach to collaborative filtering is proposed in [sarwar01itembased] where the relationship between items, instead of users, is used. Sarwar et. al developed a new technique that they chose to call item based collaborative filtering , in contrast to the techniques described by [herlocker99framework] which they refer to as user based collaborative filtering . Recommendations are based on what the active user thinks of items similar to the active item, instead of what the neighbors of the active user thinks of the active item [icf rating matrix] . The similarity between items is essentially based on the heuristic that a person's opinion on two items that are similar doesn't vary much .

Many may consider [sarwar01itembased] to be the first time an item based CF technique is described in a public paper, however as early as 1995 a similar approach was described in [shardanand95social] in form of what they called the artist artist algorithm , where they used the similarities between artists to make recommendations. The artist similarities were based on user ratings. However, they reported worse results compared to their other user based algorithm. No references is made to the scalability problem so this was probably just a test to see how well the approach stood up to their user based CF technique

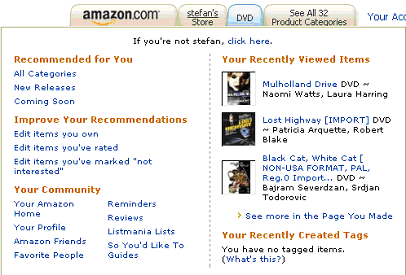

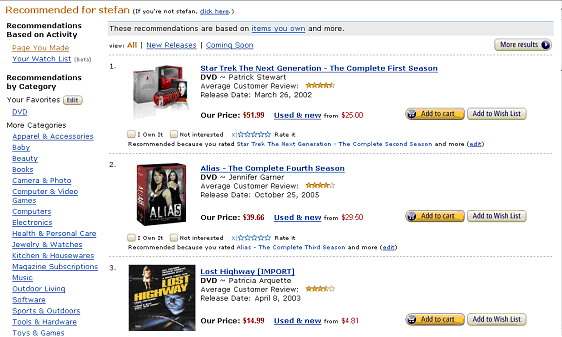

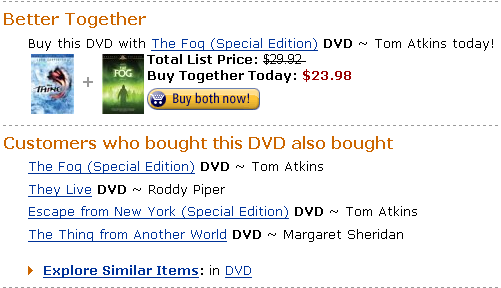

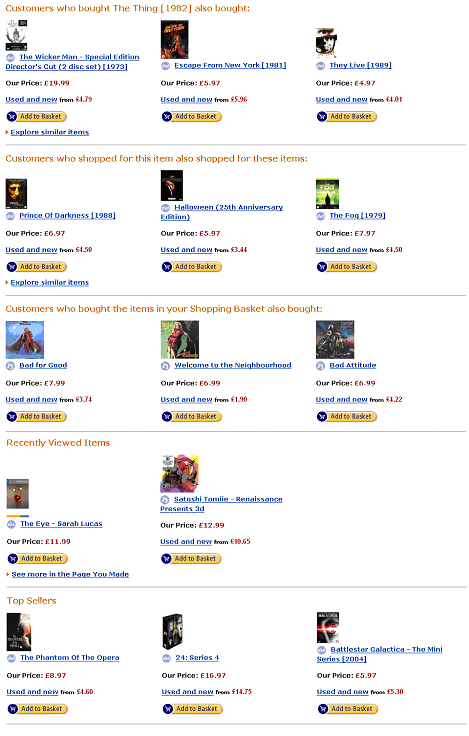

A few years later in the paper [linden03amazon] a description is given of the CF technique used byAmazon(*) , who also holds a patent for the technique they use. A brief and not very detailed description of an item based CF technique that reminds a lot of the one described in [sarwar01itembased] is given.

The technique presented in [sarwar01itembased] has been further extended in [karypis04itembased] for top n item recommendations . They propose an alternative algorithm called item set to item CF where the difference lies in that the item similarity is computed between a single item and a set of items instead of between two single items.

The empirical results for item based CF shown in [sarwar01itembased] show that a model that only uses the 25 most similar items for each item will produce recommendations that are nearly 96% correct compared to a full model that uses all item similarities. Both [sarwar01itembased] and [karypis04itembased] show comparable results against user based CF, but with a much faster computation time and no scalability problems in relation to the size of the user item rating matrix, allowing for better scalability to systems with millions of users and items.

Recommendation techniques

Two prevalent techniques based on the CF recommendation approach that have emerged over the years are user based CF as described in [resnick94grouplens] , [shardanand95social] , [herlocker99framework] and item based CF as described in [shardanand95social] , [sarwar01itembased] , [linden03amazon] , [karypis04itembased] . While both techniques rely on the CF approach where a large group of users ratings are used as a basis to make recommendations, the techniques implement the CF approach in two very distinct ways. The names of the techniques vary greatly among papers ( user based , user to user , neighborhood based , sometimes more specific such as artist to artist etc.), in this paper we have chosen to use the names user based CF and item based CF based on the historical development of CF and the distinctions necessary to make and will use them throughout this paper.

User based Collaborative Filtering

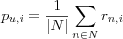

User based CF is a memory based technique for making recommendations where the main task is to find users that are similar to an active user. The users similar to the active user, usually referred to as the active user's neighbors , are used as recommenders for the active user in the recommendation process.

In [herlocker99framework] a framework is presented for performing user based CF (referred to as neighborhood based CF in the paper). The framework presented by Herlocker assumes users have been represented using their ratings on items, and instead focuses on the neighborhood formation and the prediction computation. Herlocker's framework, with additional explanations is as follows:

Weigh all users with respect to their similarity with the active user. Define a similarity function similarity(u, n) that measures the similarity between the active user u and a neighbor n (since user's are represented using their ratings profiles consisting of ratings on items, similarity is computed based on the users rating profiles). The more similar a neighbor is to the active user, the more weight is put on his influence on the final recommendation. One should observe that the introduction of a similarity measure as a weight value is an heuristic artifact that is used only for the purpose of differentiating between levels of user similarity and at the same time to simplify the rating estimation. Any similarity function can be used as long as the calculation is normalized (i.e. similarities lie in a expected interval, which typically is [0, 1] or [-1, 1] ).

Select a subset of users to use as recommenders. The subset of users (the relevant neighbors of the active user) can be selected in many different ways. Simple ways for selecting neighbors is to choose those that have a similarity above a fixed threshold value , or to choose the k most similar users according to calculated similarity or according to number of commonly rated items. While theoretically all users could be used given that similarities have been calculated, selection of a subset is often necessary practically. For example, similarities may be so unreliable that using a subset of the most trusted similarities is highly necessary to get good recommendations.

Compute predictions using a weighted combination of selected neighbors' ratings. The predicted value pa, i for the active item i that the active user u has not yet rated is computed as an aggregation of the rating of each neighbor n for the same item i , i.e.

.

.

The user based CF recommendation technique and the proposed more detailed framework thus leaves open the selection of similarity measure, the method for forming neighborhoods and the definition of the  function for making predictions. Much research has been done on possible ways of implementing and varying these parts of the user based CF technique.

function for making predictions. Much research has been done on possible ways of implementing and varying these parts of the user based CF technique.

In general the problem solved by the user based CF technique can be defined as predicting the missing values in a (typically very large and very sparse) user item rating matrix [herlocker99framework] , where each row represents a user and each column represents an item. For example the user item rating matrix given in [ucf rating matrix] shows that Giles hasn't seen the movie Kill Bill.

| The Thing | Land of the Dead | Magnolia | Kill Bill | |

| Giles | 5 | 4 | 1 | ? |

| Buffy | 5 | 5 | - | 5 |

| Spike | 5 | 1 | - | 1 |

| Snyder | 3 | 3 | 4 | - |

ucf rating matrix User item rating matrix where items are movies and cells thus consists of user ratings on movies. Shadowed cells shows which ratings are used when comparing Giles's row of ratings to Buffy's row of ratings. Only movies rated by both users can be considered.

Whom of the other users should Giles trust to make the recommendation? By studying how well Giles's row of ratings in the matrix matches Buffy's, Spike's and Snyder's row respectively, it can be determined who should be trusted, and even to which degree they can be trusted. Trust is in this case simply based on the heuristic that users that have agreed in the past probably will agree in the future , as such Buffy, who's row of ratings is most similar to Giles's row of ratings, should be trusted the most. Spike is difficult to trust as he agrees and disagrees sometimes, and Snyder can't be trusted at all, which doesn't matter however as Snyder hasn't seen Kill Bill and thus can't predict on it for Giles.

Item based Collaborative Filtering

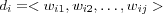

Item based collaborative filtering is a model based technique for making recommendations where the main task is to find items that are similar to an active item. The populations ratings on items is used to determine item similarity. Recommendations are formed by considering the active users ratings on items similar to the active item, usually refered to as the active items neighbors (to be clear, the neighbors in item based CF are items, not users). It is assumed that items have been represented using the users' ratings on the items. The recommendation technique then consists of the following three major steps:

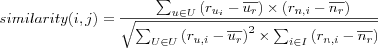

Weigh all items with respect to their similarity to the active item. Define a similarity function similarity(i, j) that meassures the similarity between the active item i and another item j (since item's are represented using rating profiles consisting of users ratings on the item in question, the similarity is computed based on the items rating profiles).

Form the active item's neighborhood of similar items. The neighborhood can either be such that it consists of the most similar items out of all items, independent of wheter the active user has rated them or not, or it can be the set of the most similar items out of those the active user has rated. Typically only a subset of the k most similar items are selected.

Compute a prediction on the active item for the active user using a prediction algorithm that is based on how the active user has rated items in the active item's neighborhood .

The main motivation behind this approach is to overcome some of the problems with scalability and computational performance that are symptomatic for user based CF.

Similar to user based CF the item based CF technique can be defined as predicting the missing values in a user item rating matrix. In [sarwar01itembased] it is proposed that the relations between columns in a user item rating matrix should be considered. Thus, while the problem space is still that of predicting the missing values in a user item rating matrix as defined in [herlocker99framework] , relations between items instead of users is now considered. For example the user item rating matrix given in [icf rating matrix] shows that Giles hasn't seen Kill Bill.

| The Thing | Land of the Dead | Magnolia | Kill Bill | |

| Giles | 5 | 4 | 1 | ? |

| Buffy | 5 | 5 | - | 5 |

| Spike | 5 | 1 | - | 1 |

| Snyder | 3 | 3 | 4 | - |

icf rating matrix User item rating matrix where items are movies and cells thus consists of user ratings on movies. Shadowed cells shows which ratings are used when comparing the movie Land of the Dead's column of ratings to the movie Kill Bill's column of ratings, only ratings by users that have rated both movies are considered.

When predicting the missing rating on Kill Bill for Giles in this case, the prediction will be based on what Giles thinks of movies that are similar to Kill Bill. The similarity between movies is found by matching the column vector of Kill Bill against the column vector of the other three movies. The similarity between items is essentially based on the heuristic that a persons opinion on items that are similar doesn't vary much . As such, since ratings on Kill Bill and Land of the Dead varies the least, among users that have rated both movies, they can be considered similar (and I think we can all agree that both movies have a lot of death , serendipity!). Since Giles thought Land of the Dead was a 4 that might thus be a suitable prediction on Kill Bill for Giles.

Similarity measures

Prevalent throughout the research into collaborative filtering has been the study of similarity measures to use to determine user (and item) similarities. The two most popular types of similarity measures are correlation based and cosine based . In [resnick94grouplens] they suggested the use of the Pearson correlation coefficient as a correlation based similarity measure. [breese98empirical] compared recommendation techniques using the Pearson correlation coefficient and the cosine angle between vectors as similarity measures, the recommendation technique using the Pearson correlation coefficient was found to perform better. The Ringo system [shardanand95social] made use of the mean squared difference and a constrained version of the Pearson correlation to measure the similarity between two users. Their results show that the constrained Pearson performs better compared to the Pearson correlation coefficient and the mean squared difference.

In [billsus98learning] some important issues regarding ( linear ) correlation-based approaches are discussed, which we refer to as the reliability , generalization and transitivity issues.

- Reliability

The correlation between two users is calculated only over items both have rated. Since users can choose among thousands of items to rate, it is likely that the overlap between most users will be small. This has the effect that the use of the correlation coefficient between two users as a similarity measure is in many cases unreliable. For example, correlations based on three items has as much influence in the final prediction as a correlation based on thirty items. It is thus important to somehow take into account the number of items involved in the correlation, a popular solution to this is the usage of significance weighting [herlocker99framework] .

- Generalization

The correlation based methods typically uses a generalized global model of similarity between users, rather than separate specific models for classes of ratings. For example, referring to [generalization rating matrix] where a rating scale of 1 to 5 is used, we see that the two users agree on two movies and disagree on the remaining movies.

The Thing Grudge Andrey Rublyvov Fargo Magnolia Giles 5 5 1 2 1 Spike 1 2 1 2 2 generalization rating matrix User item rating matrix consisting of two users. Note that when Giles rates something highly (5), Spike gives the same item a low rating (1 or 2). But when Spike gives an item a low rating (1 or 2) there's no pattern for how Giles will rate the same item. The users have a low linear correlation, as such their influence on each other will be low despite the fact Giles might be a good negative predictor for Spike.

The correlation between the two users is near zero, so they would have little influence on each other in the recommendation process. It would though be conceivable to interpret positive ratings of Giles as perfect negatives for Spike. This fact, and many similar such cases, is however completely ignored with the correlation approach as it uses a model that is too generalized.

- Transitivity

Since two users can only be regarded as similar if they have items that overlap, this has the effect that users with no overlap are regarded as dissimilar in the correlation approach. However, just because two users have no overlap, doesn't necessarily mean that they are not like-minded. Can such like-mindedness still be detected? Consider the following example; user A correlates with user B ; user B correlates with user C ; since user A has no items in common with user B the two do not correlate. But one thing they do have in common is their similarity with user B , i.e.

, and this information could be used to establish a similarity between users A and C .

, and this information could be used to establish a similarity between users A and C .

Different ways of improving and extending primarily the correlation based similarity measures have been developed, some of the more widely known and used are the ones proposed by Breese [breese98empirical] and Herlocker [herlocker00understanding] . Breese suggests three different extensions, default voting , inverse user frequency and case amplification . Herlocker suggests the use of significance weighting .

- Default voting

Since the reliability of the correlation between two users increases with the number of items that are used for calculating the correlation, few co-rated items means that the reliability of the similarity is questionable. Instead of only using co-rated items, Breese suggests that the union over all items that two user have rated should be used. For each item in the union where one of the two users has a missing rating, a default rating is inserted. Breese suggests that the default value should be a neutral rating or a somewhat negative preference for the unrated item. It is important to realize that the basic idea of default voting only applies to the union of items rated by both users in question. However, they also suggest that a default value can be added to some additional items that neither one of the two user have rated but that they might have in common.

- Inverse user frequency

The extension is based on the idea that universally liked items are not as useful in capturing similarity as less common ones. Inverse user frequency for an item is calculated by taking the logarithm of the ratio between the total number of users and how many that have rated the item. This is analogous to the inverse document frequency typically used in information retrieval to reduce influence of common words when calculating document vector similarity. The ratings for each item is then multiplied by their calculated inverse user frequency when calculating similarity between users's rating vectors (this would not be suitable for correlation based approaches).

- Case amplification

Refers to a transform applied to the similarity. Positive similarities will be emphasized while negative will be punished. Breese uses a formula where positive similarities are left unmodified, and negative similarities are modified to lie closer to zero.

- Significance weighting

A method that devalues similarities that are based on too few co-rated items. It is based on the observation made by Herlocker that users that have few items in common but are highly correlated, usually turns out to be terrible predictors for each other. His experiments show that a minimum of 50 co-rated items is typically required for producing more accurate predictions, imposing a higher value on the minimum number of co-rated items doesn't have any significant effect on the accuracy. If two users have 50 or more items in common, their similarity is left unmodified, but if they have less than 50 co-rated items, the similarity is multiplied with the number of co-rated items divide by 50.

In particular significance weighting is also a useful extension for cosine based similarity measures. In [sarwar01itembased] the adjusted cosine similarity measure and significance weighting is used to determine item similarities based on user ratings, they report better results when using the adjusted cosine than when using the person correlation coefficient as a similarity measure.

Pearson's correlation coefficient

[resnick94grouplens] suggested (by use of an example) the usage of the Pearson correlation coefficient as a similarity measure between users item ratings.

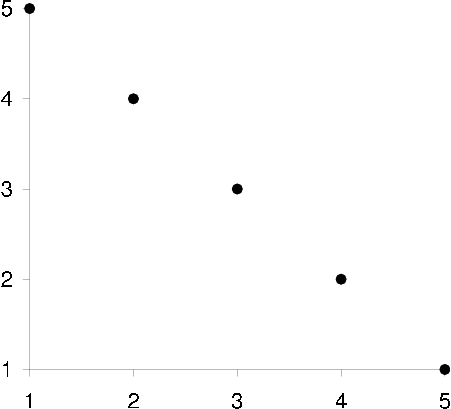

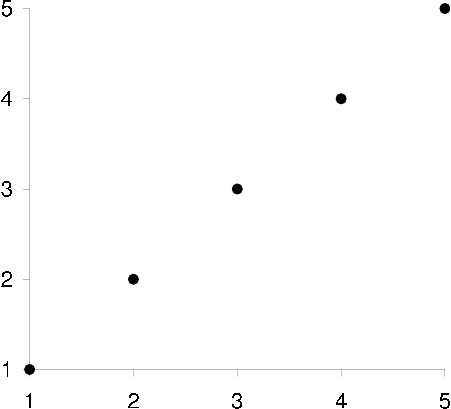

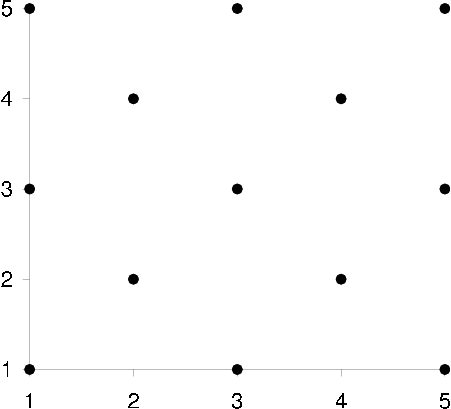

By studying how users ratings are distributed in a scatter plot it is determined whether a positive or negative linear correlation exists between the two users ratings [scatter plots] . As with any correlation based similarity measure only co-rated items can be considered (unless an extension such as default voting is also used).

scatter plot correlation negative Correlation: -1

scatter plot correlation positive Correlation: +1

scatter plot correlation zero Correlation: 0

scatter plots Scatter plots showing two users u (x-axis) and n (y-axis) correlation when assigning ratings on a discrete rating scale  to items. A -1 correlation can be interpreted as whenever user u rates something high user n rates it low, or whenever u rates something low n rates it high. A +1 correlation can be interpreted as whenever user u rates something high user n rates it high, or whenever u rates something low n rates it low. A 0 correlation simply means that whenever u rates something low, n might rate it low too or just as likely rate it high, no linear relationship exists in the scatter plot.

to items. A -1 correlation can be interpreted as whenever user u rates something high user n rates it low, or whenever u rates something low n rates it high. A +1 correlation can be interpreted as whenever user u rates something high user n rates it high, or whenever u rates something low n rates it low. A 0 correlation simply means that whenever u rates something low, n might rate it low too or just as likely rate it high, no linear relationship exists in the scatter plot.

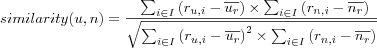

The correlation between two users u and n is calculated using the two user's respective row in the user item rating matrix (as previously described), but ignoring columns for items both users haven't rated. Since it is practical to not have to deal with missing values in the matrix, we will adopt a variation of the user item rating matrix model (in the next chapter a formal definition of this model will be given) which associates a set of ratings with each user. Let; ru, i denote the r ating on item i by user u ; ur denote the set of ratings given by user u . The similarity between users u and n can then be defined by their Pearson correlation as follows:

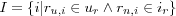

where I is the set of co-rated items, i.e.  .

.

Note that the mean ratings  and

and  for each user should be calculated over the set of co-rated items since the correlation formula only deals with co-rated items. Variations of the formula exist, particularly one form that does not require the calculation of user means is often used. The similarities defined in this manner will lie in the interval [-1, 1] where similarities near -1 mean negative correlation and similarities near +1 mean positive correlation. Values around zero indicate that no linear correlation existed between the two users, this doesn't necessarily mean the two users have dissimilar taste, however in lack of further analysis we must assume the users are dissimilar.

for each user should be calculated over the set of co-rated items since the correlation formula only deals with co-rated items. Variations of the formula exist, particularly one form that does not require the calculation of user means is often used. The similarities defined in this manner will lie in the interval [-1, 1] where similarities near -1 mean negative correlation and similarities near +1 mean positive correlation. Values around zero indicate that no linear correlation existed between the two users, this doesn't necessarily mean the two users have dissimilar taste, however in lack of further analysis we must assume the users are dissimilar.

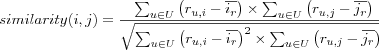

While the application of the Pearson correlation coefficient has been in terms of determining similarity between two users, a similar reasoning applies to finding the similary between two items. The correlation between two items is calculated using the two items's respective column in the user item rating matrix, but ignoring rows for users that haven't rated both items. To denote the set of ratings given to an item i the notation ir is used. The similarity between items i and j can the be defined by their Pearson correlation as follows:

where U is the set of co-rated items, i.e.  .

.

As can be seen the notation is the same with users substitued for items.

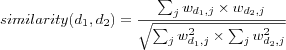

Cosine angle

In the user rating matrix terminology we can can view each user's row in the matrix as a rating vector, and use the cosine angle as a similarity measure between two users rating vectors. The cosine angle is measured in an N -dimensional space where N is the number of co-rated items between the two users.

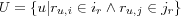

Using the previously adopted terminology the similarity between users u and n can be defined by the cosine angle between their ratings vectors as follows:

The similarities defined in this manner will lie in the interval [0, 1] , where zero means no similarity and one strong similarity.

Adjusted Cosine

When finding the similarity between two items based on users ratings on the items, [sarwar01itembased] found that using an adjusted version of the cosine angle when calculating similarities produced better results (the resulting predictions relying on the calculated similarities were better in terms of some evaluation metric) than using the cosine angle and the person correlation coefficient in the similarity calculation.

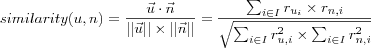

The similarity calculation based on adjusted cosine unlike basic cosine takes into consideration that users use different personal rating scales. The similarity between items i and n is now defined as:

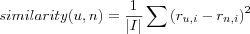

Mean squared difference

Used most notably in the Ringo system. Let |I| denote the number of co-rated items for user u and n . The similarity between users u and n is defined as:

Recommendations

Equally prevalent in CF research as similarity measures, is research into how to use the similarities to make actual recommendations. Two distinct types of recommendations are considered to exist, predictions and ranking lists (top N lists). A prediction is a value indicating to a user some kind of preference for an item, while a ranking list consists items the user will like the most sorted in descending order of preference, typically only the top N items in the ranking list are shown.

Prediction algorithms

A prediction is a numerical value pu, i that express the preference (interest, "how much something will be liked") of item i for a user u . The preference is usually encoded as a numeric value within some discrete or continuous scale, i.e. [1, 5] where 1 expresses dislike and 5 a strong liking. Several different prediction algorithms have been proposed in the literature. The most commonly used is [weighted deviation from mean] , commonly known as weighted deviation from mean , which was first proposed in the GroupLens System [resnick94grouplens] .

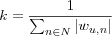

Prediction algorithms rely on a set of neighbors N that consists of users that for simplicity in this discussion will be assumed to have rated the active item i . Three common ways of computing predictions are then:

The constant k , sometimes called the "normalizing constant" is usually taken to be:

The normalizing constant doesn't cause the predictions to lie within the original rating scale the predictors rated items on, however it will make them lie "close enough". Predictions can be put into the original rating scale by clamping values (e.g. clamp all prediction above 5 to 5). In a sense the predictions are relative each other, a high predicted value indicates a stronger preference than a lower predicted value, however since predictions are often viewed "on their own" it does become necessary to clamp the predictions to a scale the user can understand.

Note the usage of wu, n where w stands for weight. The first prediction algorithm uses no such weight, it simply calculates the whole population's average rating for the active item i . The weight is introduced in the second prediction algorithm to discern between levels of user similarity, this weight is the similarity between the active user and each neighbor contributing to the prediction, which in this case means all users in the population that have rated the item. (Weights are always taken as some form of similarity between users, the term weight stems from the recommender systems histoy in information retrieval and filtering and will not be used beyond this chapter, instead the more obvious term similarity will be used.)

The first prediction algorithm [average rating] expresses the simplest case, the predicted value is just the average value for that item given by all users (because of this the name Item mean is sometimes used to refer to this prediction algorithm). This algorithm is often used as a baseline predictor when new prediction algorithms are tested.

The most common aggregation formula used is [weighted average rating] (which can be refered to as the Weighted average rating prediction algorithm), it weighs all user with respect to its similarity to the active user. The purpose of the weighting is to ensure that like-minded users for the active user will have more to say in the final prediction, this algorithm was used in for example the Ringo system [shardanand95social] .

However, it has been found that users use different rating scales when rating items, e.g. some users will be very restrictive about giving items top ratings, while other users may be more generous or have widly different definitions of what a top rating means and and rate many items they like with top ratings (perhaps two users have the opinions "great movie, I give it a two" and "terrible movie, I give it a two" about the same movie, which isn't unusual as the authors can testify). This means that the rating distribution between users will be skewed. Letting a user that rates many movies with the top rating predict for a user that is very restrictive about top grades may have a negative effect on prediction quality. In order to neutralize the negative effect of the skewed rating scales, [weighted deviation from mean] was introduced. The very basic idea is to start out with the active users mean rating, and then look at how the active user's neighbors deviate from their mean rating (because of this the prediction algorithm is often referd to as Weighted deviation from mean ). If the neighbors do not deviate from their mean rating, then neither should the active user. This prediction algorithm was used first in the GroupLens System [resnick94grouplens] .

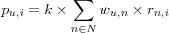

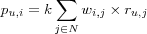

Common prediction algorithms used by item based CF have similarities with the previously presented prediction algorithms, however with some important differences. The neighborhood set N consists of items similar to the active item i . For discussion it will be assumed that N consists of all items similar to the active item.

A algorithm in the item based case that is similar to [average rating] would simply take the active users average rating on all items he has rated. However as that makes little sense, the items' degree of similarity is also accounted for.

A common algorithm for making predictions in item based CF is [weighted sum] (often referd to as Weighted sum ) (with e.g.  ).

).

Ranking algorithms

A ranking list, or more commonly a top N list since the ranking list typically consists of the N items (out of all available in the dataset) that the active user will like the most sorted in descending order of preference. Top N lists can be created both by simply sorting predictions, or by using algorithms specifically designed for top N list generation. Any recommendation technique making predictions can also sort the predictions to generate top N lists. Thus the following methods for generating top N lists are usually created when the underlying data is not ratings, rather transaction data, e.g. information on what items a user has purchased. However, there is nothing to prevent using rating, however it will be necessary for some parts of the algorithm to reduce the rating data to unary data, this means loosing information which might lead to worse results had predictions been sorted.

Two ways of producing top N ranking lists, when the underlying data is transaction data, are given in [karypis01evaluation] and are outlined below.

- Frequent item recommendations

This method creates a top N ranking list by first creating a set of items consisting of all items neighbors of the active user has purchased . Items that the active user has purchased are excluded from the set. The frequency of each item in the set is counted, i.e. it is counted how many of the neighbors purchased each item. The items are then sorted in descending order of frequency. The N most frequent items are then presented to the active user as a top N list.

- Similar item recommendations

This method creates a top N list by first creating a candidate set of the k most similar items for each of the items in the active users profile. The set consists only of distinct items and items already purchased by the active user are removed. For each item in the candidate set, its similarity to each of the items in the active users profile is computed and summed up. The N items in the candidate set are then sorted with respect to their summed similarity to the active users profile. The N items with the highest summed similarity are then presented to the active user as a top N list.

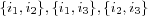

In [karypis04itembased] an interesting variation on their similar item recommendations method is proposed. Instead of only finding the k most similar items for each item in the active users profile, the k most similar items to all possible sets of items in the active user's profile are found. For example, suppose the active user has three items i1 , i2 and i3 in this profile, then the candidate set should include the k most similar items for not only items i1 , i2 and i3 , but also for the sets

and

and  . This requires a similarity measure that is able to determine similarity between one item and a set of items. They claim that this approach will discover items that can be of potential interest to the active user that otherwise wouldn't be found, i.e. the k most similar items to e.g. the set

. This requires a similarity measure that is able to determine similarity between one item and a set of items. They claim that this approach will discover items that can be of potential interest to the active user that otherwise wouldn't be found, i.e. the k most similar items to e.g. the set  might include items that are not in either of the individual items list of k most similar items. Techniques implementing this method of generating top N list require very large models of the k most similar items to be build, to reduce model size only item sets that occur frequently among users can be used. The notation frequent item sets is used by the data mining community in connection with association rules as defined in [agrawal93mining] .

might include items that are not in either of the individual items list of k most similar items. Techniques implementing this method of generating top N list require very large models of the k most similar items to be build, to reduce model size only item sets that occur frequently among users can be used. The notation frequent item sets is used by the data mining community in connection with association rules as defined in [agrawal93mining] .

Content Based Filtering

Information filtering and content based filtering refers to the same paradigm, filtering items by their content. However information filtering is most often used in connection with text documents, while content based filtering allows for a wider definition of content. Pure content based filtering, as defined in [balabanovic97fab] , is when recommendations are made for a user based only on a profile built up by analyzing the content of items that the user has rated or otherwise shown interest in. We will be using the term content based filtering since we will not constrain our self to text documents only, that is, we will use any content that after some preprocessing can be used in the filtering process. From the definition we can identify two important steps in content based filtering, the need for both a technique to represent the items and for a technique to create the user profiles.

Many of the techniques that have been developed and used in text retrieval and text filtering have been adopted by the content based approach [oard97stateoftheart] . One of the more popular techniques is the vector space model and especially the tf-idf weighting scheme for representing the content of the text documents. Popular approaches for creating user profiles are techniques found in the machine learning field. One such technique is the use of a classification algorithm that can learn a user profile that it can use to distinguish between items that are liked and disliked by the user.

So not surprisingly, recommender systems that use the content based approach are often found in domains where the content has some similarity to plain text documents. For example Syskill & Webert [pazzani96syskill] is a content based recommender system for webpages. Users rate webpages on a three point scale, the system can then recommend new WebPages or create a search query for the user to be used in a search engine. Users of the system are presented with an index page that links to several manually created topic webpages that contains hundreds of links to pages within that topic. The system creates user profiles for each topic rather than user profiles for all topics combined. Based on the user profiles, some of the links on the topic pages will be associated with a symbol (e.g. thumbs up, thumbs down, smileys, etc.) that symbolizes how much the user will like the page that is linked to.

In the Syskill & Webert recommender system each webpage is represented as a boolean feature vector consisting of the k most informative words for the topic it belongs to. The boolean values for each feature indicates if a word is present or absent in the web page. They chose k to be 128. Words to use as features in the feature vectors differ for each topic, but are the same for all webpage's belonging to the same topic. The words within each topic are chosen based on how much expected information gain the presence or absence of the word will give when trying to classify a webpage for a user. A learning algorithm is used to classify unrated webpage's for users, the learning algorithm analyzes the content of all the pages a user has rated and by this process learns the user's profile. Five different types of learning algorithms were tested by Pazzani et. al, Bayesian classifiers, nearest neighbor algorithms, decision trees, neural nets and tf-idf as described in the vector space model. The algorithms show different results over different topics, but the overall best performance is given by the Bayesian classifier. All algorithms show results that almost always beats the baseline algorithm, which is just guessing what pages a user would like.

Content based filtering systems based on item attributes differs in some aspects from keyword based systems like Syskill & Webert. Extracting product content is a straight forward process, the attributes of the product is usually well structured, such as for movies where the content consists of attributes like the movie's director, lead actress/actresses, genre etc. However, the challenge is to select only the most important attributes. The key issue here is: what defines an attribute as important? Since importance differs from product to product, no standardized ways, like for example the tf-idf weighting scheme used with text documents in the vector space model, exists for product attributes (that we are aware of). Choosing the wrong attributes can have a great impact on the performance of the recommendation technique, so the choice of attributes is usually done manually either by some domain experts or by empirical tests.

In [mellville02conentboosted] they represent the content of a movie as a set of "slots" containing attributes of the following type: movie title, director, cast, genre, plot summary, plot keywords, user comments, external reviews, newsgroup reviews and awards. A movie will in this case be represented as a vector of bags of words . A naive Bayesian classifier is then used to create user profiles. They report almost equal performance between their implementation of a user based collaborative filtering technique and the content based filtering technique.

A way of making movie recommendations with a minimum of knowledge about the users is described in [fleischman03recommendations] . Instead of representing and learning user profiles from the users preferences about movies, the recommender system only uses one movie as a seed movie to base its recommendations on. For example, suppose the active user has a favorite movie and wants to find out if there exists any similar movies. By using the favorite movie as a seed movie, the recommender system can make some recommendations that are based on the similarity with the seed movie. They propose two different techniques for this and the only difference between them is how the content of the movies is represented. In the first technique, they only use the plot summaries of the movies and represent the movies with a binary vector, where 1 and 0 represents the presence and absence of a word respectively. The size of the vector is the same as the total size of the used vocabulary. Two movies are found similar by calculating their cosine similarity as described in the vector space model. The second technique also uses the cosine similarity for finding similar movies, but the representation of the movies is different. In this case, each movie is represented by a vector that contains the movies cosine similarities to different movie genres. Each genre is represented as a vector of keywords that are weighted by how indicative they are of the particular genre. The keywords were taken from the Internet Movie database (IMDb)(*) . Thus, the first technique recommendations movies based on how well two movie's plot summaries match, and the second one recommends movies from their shared similarity and dissimilarity to particular movie genres. Their results shows that no conclusions can be made whatsoever about the performance of their techniques, for example, they compared the top five recommendations from their techniques against the output from IMDb on one particular movie and none of them recommended the same movies.

Vector Space Model

The vector space model is described in [salton83introduction] as a vector matching operation for retrieving documents from a large text collection. The user formulates a search query consisting of words that best describes his information need and this query is then matched against all documents in the text collection. The matching operation uses a similarity formula to determine which documents best matches the search query and then retrieves those documents for the user.

In this model, a document (and a search query) is represented by a vector of weighted keywords (terms) extracted from the documents [salton88termweighting] . The model is also popularly called the bag of words representation since the words are stored in a unordered structure that does not retain any term relation. For example, terms appearing together in a document can have some special meaning, but that will be lost by this kind of representation. The weights represent the importance of the keywords in the document as well as in the entire collection of documents. Since each document vector is represented in a vector space that is spanned by the number of terms, this model can be viewed as a term document matrix [term document matrix] , where rows are documents and the columns are all (or some of) the terms that appear in the document collection.

| t1 | t2 | ... | tj | |

| d1 | w11 | w12 | ... | w1j |

| d2 | w21 | w22 | ... | w2j |

| ... | ... | ... | ... | ... |

| di | wi1 | wi2 | ... | wij |

term document matrix Term document matrix. The rows represent documents and the columns terms that appear in the document collection, but not necessarily in all documents. The cells contains weights indicating the importance of each term in each document.

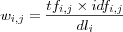

If we use wi, j to denote the weight for term j in document i , the document vector can be expressed as:

This model has been used for a long time as a basis for successful algorithms for ranking, filtering and clustering documents, see for example [baeza-yates99modern] .

Three factors that must be considered when calculating the weights are term frequency , inverse document frequency and normalization . After all the weights have been calculated, similarity between two documents d1 and d2 can be calculated using for example the cosine angle:

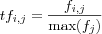

The term frequency is just a count of how many times a term occurs in a document. If a term occurs many times in a document, then it is considered important for that particular document. The term frequency f for term j in document i , i.e. the number of occurrences of term j in document i , is denoted as fi, j . This is sometimes referred to as the terms local frequency.

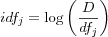

The inverse document frequency (IDF) identifies terms that occurs only in a few documents. Such terms are considered to be of more importance than terms that are prevalent in the whole document collection. Terms occurring in the whole document collection make it hard to distinguish between documents and if used causes all documents to be retrieved which naturally affects the search precision. The IDF factor is usually referred to as the terms global frequency. The IDF factor for a term is inversely proportional to the number of documents that the term occurs in, since when the document frequency for a term increases, its importance decreases. The frequency f for term j in document d is denoted as dfj and the inverse document frequency for term k in document d is denoted as idfj . The inverse document frequency ifj is inversely proportional to the document frequency dfj , i.e.:

and varies with the document frequency dfj . The idfj for a term j is usually taken as the logarithm of the ratio between the total number of documents D and the document frequency, i.e.:

The logarithm (which can be taken to any convenient base) is just there for smoothing out large weight values. Thus we get:

The term frequency fi, j and the inverse document frequency idfj can be combined as  to obtain a weight that accounts both for terms occurrences within a document and within the whole document collection. However, it is necessary to perform some normalization in order to avoid problems related to keyword spamming and bias towards longer document. Two types of normalization that is suggested is normalized term frequencies and normalized document lengths .

to obtain a weight that accounts both for terms occurrences within a document and within the whole document collection. However, it is necessary to perform some normalization in order to avoid problems related to keyword spamming and bias towards longer document. Two types of normalization that is suggested is normalized term frequencies and normalized document lengths .

Since higher term frequencies fi, j results in a larger weight value than for terms with a lesser frequency models are vulnerable to keyword spamming, i.e. a technique where terms are intentionally repeated in a document for the purpose of generating larger weight values and by that way improving the chances of the document being retrieved. One way to avoid this is to calculate the normalized term frequency . The normalized term frequency of term j in document i is denoted tfi, j and is usually calculated by dividing the frequency of the term j with the maximum term frequency in document i denoted max(fi) , i.e.:

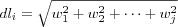

Normalized document lengths are used since longer documents usually use the same term more often, resulting in larger term frequency factors, and using more different terms as opposite to shorter documents. This means that longer documents will have some advantages over shorter ones when it comes to calculating the similarity between documents. To compensate for this cosine normalization can be used as a way of imposing penalty on longer documents, it is computed by dividing every document vector by its Euclidian length. The Euclidian length of a document i is defined as:

Terms that should receive the highest weight are those that have high term frequency and low overall document collection frequencies. Salton [salton88termweighting] refers to this property as term discrimination since this suggest that the best terms are those that can be used to distinguish certain documents from the remainder of the collection. This is achieved by multiplying the normalized term frequency with its inverse document frequency  , and then normalizing it to achieve the final weight for the term j in document i :

, and then normalizing it to achieve the final weight for the term j in document i :

Hybrid Filters

Both collaborative filtering and content based filtering have their strengths and weaknesses, hybrid filters are an attempt to combine the strengths of both techniques in order to minimize their respective weaknesses. There are many examples of recommender systems based on hybrid filters.

- FAB

A content based collaborative filtering system for webpage's [balabanovic97fab] . In FAB webpage's are represented by the words that receives the highest tf-idf weight and the user profiles are represented by an tf-idf vector that is the average of the webpage's that are highly rated by the user. The user can then receive recommendations from similar users using the collaborative approach or by direct comparison between a webpage and a user's profile. Their results show that the FAB system makes better recommendations compared to recommendations that are randomly selected, human selected "cool sites of the day" or pages best matching an average of all user profiles in the system.

- Syskill & Webert

Pazzini [pazzani99framework] extended the content based technique that was used in his Syskill & Webert [pazzani96syskill] recommender system with collaborative filtering techniques. He refers to this new technique as collaboration via content . Webpage's are represented by the 128 most informative keywords for the class they belong to. A content based approach different from the one presented in [pazzani96syskill] is used both on its own and in combination with a collaborative filtering technique. In the content based approach a user profile is learned by first applying an algorithm that assigns weight values to each informative word in a webpage a user has rated. Weights are initially set to 1, the weight values for each webpage are then summed up and if the sum is above a threshold value and the user liked the webpage, the weights are doubled, otherwise they are divided by two. Training stops after 10 iterations over all pages or when each rated webpage is correctly classified (i.e. weights for words on all positively rated pages sum over the threshold, and weights for words on all negatively rated pages have a sum under the threshold). Webpage's are then recommended by applying the user's weight values on the words that occurs in each webpage and summing up the total weight for each webpage, the webpage's with the highest sum are recommended to the user. In the collaboration via content technique the content based user profiles, consisting of the user's word weights, are used instead of user profiles consisting of ratings, in the same manner as in standard collaborative filtering. Their experimental results show that when users have very few webpage's in common, collaboration via content has a better precision than standard collaborative filtering. But as soon as users get more and more webpage's in common, collaborative filtering performs almost equally well. Precision was measured based on the top three webpage's that was recommended to a user.

- P-TANGO

[claypool99combining] is a recommender system for news articles from an online newspaper that uses both the content based approach and the collaborative approach in the recommendation process. It's not a hybrid system in the sense that the two approaches depend on each other, instead they are implemented as two separate modules that are independent of each other. The recommendations from the two approaches are instead weighted and combined to form a final recommendation. One advantages over hybrid systems where both approaches are tightly coupled and deeply dependent on each other, is that the system can choose which approach should have more confidence during different circumstances. For example, when few people have accessed a news article, the content based recommendation is weighted higher but as soon as more and more people is showing interest in the article, the recommendations based on the collaborative approach take over. Also, improvements of the system is easier, since whenever someone comes up with a new and improved way of making either collaborative or content based recommendations, these are easily incorporated into the system. The user profiles in the content based approach consists of keywords that are matched against item profiles that consists of extracted words from news articles. The keywords in the user profiles are either taken from articles the users have rated highly or are keywords the users have marked as important. Their experimental results show that the combined recommendations are a bit more accurate than either of the separate ones.

- Rule based using Ripper

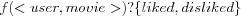

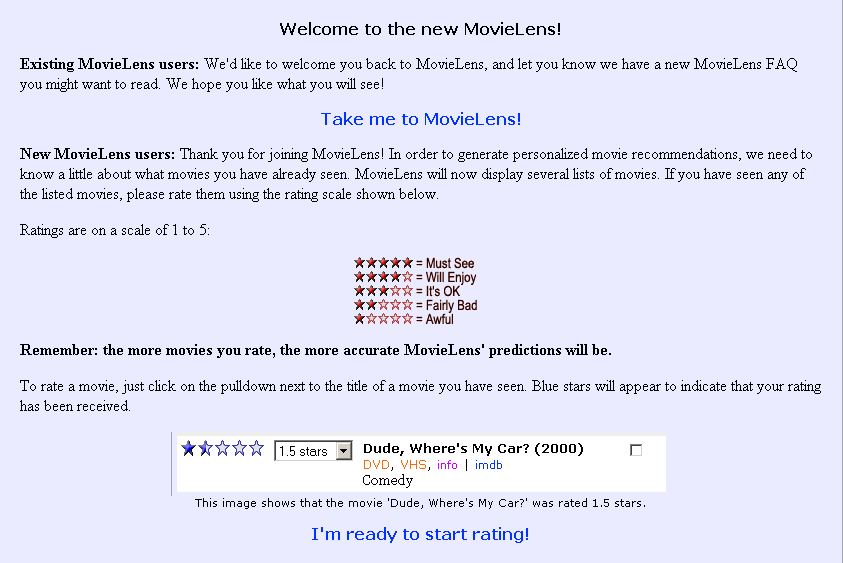

In [basu98recommendation] they treated the task of recommending movies to users as a classification problem. They saw the recommendation process as the problem of learning a function

that takes as input a user and a movie and produces an output that tells whether the user will like or dislike the movie. For this task, they chose to use an inductive learning system called Ripper. Ripper is able to learn rules from set-valued attributes, in this case by taking as input a tuple

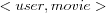

that takes as input a user and a movie and produces an output that tells whether the user will like or dislike the movie. For this task, they chose to use an inductive learning system called Ripper. Ripper is able to learn rules from set-valued attributes, in this case by taking as input a tuple  consisting of two set-valued attributes user and movie. They created tuples consisting of what they call collaborative features (user's ratings on movies) and content features (information available on movies, they used over 30 different attributes). Using for example collaborative features the <user, movie> tuple would contain for the user attribute which movies the user likes, and for the movie attribute which users that likes the movie (e.g.

consisting of two set-valued attributes user and movie. They created tuples consisting of what they call collaborative features (user's ratings on movies) and content features (information available on movies, they used over 30 different attributes). Using for example collaborative features the <user, movie> tuple would contain for the user attribute which movies the user likes, and for the movie attribute which users that likes the movie (e.g.  where movie1 and movie2 are two movies liked by the user in question, and user1 , user2 and user3 are three other users that like the movie in question). They also used what they call hybrid features, e.g. a movie was represented using users that like a particular genre. Each such

where movie1 and movie2 are two movies liked by the user in question, and user1 , user2 and user3 are three other users that like the movie in question). They also used what they call hybrid features, e.g. a movie was represented using users that like a particular genre. Each such  tuple is then labeled by whether or not the movie was liked by the user. Given such tuples the Ripper can be trained to create rules for every user, which can be used in the recommendation process. Example of an interpretation of a rule for a user that like action movies can be " if action is present among movies that the user likes then predict user likes movie" . They report that their approach performs reasonably well compared to the collaborative approach on the same data. However, this is only achieved with the combination of a great deal of manual work in selecting and refining the attributes and at a low level of recall.

tuple is then labeled by whether or not the movie was liked by the user. Given such tuples the Ripper can be trained to create rules for every user, which can be used in the recommendation process. Example of an interpretation of a rule for a user that like action movies can be " if action is present among movies that the user likes then predict user likes movie" . They report that their approach performs reasonably well compared to the collaborative approach on the same data. However, this is only achieved with the combination of a great deal of manual work in selecting and refining the attributes and at a low level of recall.- CF with content based filterbots

Sarwar et. al uses in [sarwar98using] what they refer to as filterbots, software agents whose only purpose is to give content based ratings on new items that arrives into the system. Since the filterbots are used as recommenders for those users that correlate with them, new items will be available for recommendation much faster then if they had to wait for real users to come along and rate them. They tested their filterbots in the GroupLens system that recommends articles from a Usenet news server. One of the filterbots they implemented was a SpellCheckerBot, whose sole purpose was to run a spell checking algorithm on every new article that arrived to the Usenet news server. The final rating on the article was then decided based on the number of misspelled words. Other filterbots that they tried based their ratings on the length of the article and number of quotes in the articles. They report that a simple filterbot like the spell checker can improve both the coverage (number of items available for recommendation among all items) and the accuracy of the given recommendations. Their results however show that filterbots are sensitive to which domain they operate over, e.g. some did well in newsgroups about humor but some did not etc.

- Content-boosted CF

In [mellville02conentboosted] they implement two hybrid systems, the first one combined the outcome from a pure content based filtering technique with the outcome from a user based collaborative filtering technique by taking the average of the ratings that are generated. Their other system is called content-boosted collaborative filtering (CBCF). This system first creates pseudo user-ratings vectors for every user by letting a content based filter (as previously described) predict ratings for every unrated item. These pseudo user-ratings vectors are then put together to give a dense pseudo-user ratings matrix. Collaborative filtering is then performed by the use of this matrix. Similarity between two users are calculated based on the pseudo ratings matrix, in the prediction process two additional weights are added; the calculated accuracy of the pseudo user-ratings vector for the active user and a self-weight that can give extra importance to the active users pseudo self, since he is used as a neighbor. Their results shows that the CBCF performs best, followed by pure CF, combined CF and CB and last pure CB. Although, the difference between the CBCF and the pure CB is very small.

- Combining attribute and rating similarity